Blog

Security

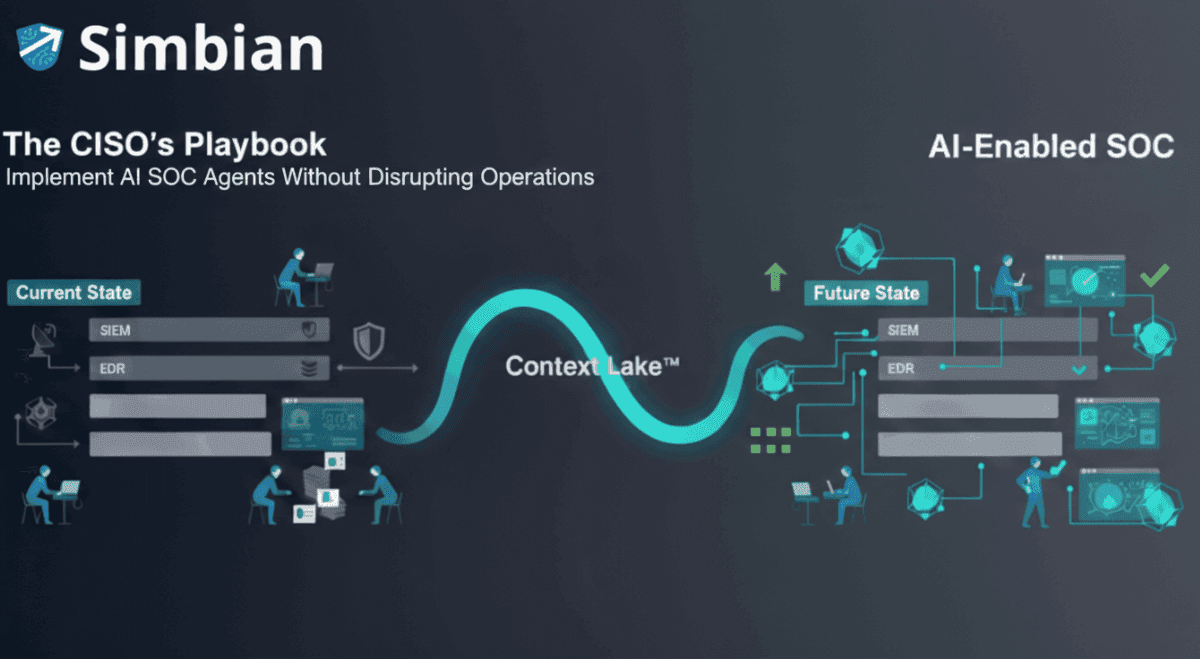

The CISO's Playbook: How to Implement AI SOC Agents Without Disrupting Your Security Operations

Step-by-step guide for CISOs implementing AI SOC agents in 2026. Learn what changes, what stays, and how to achieve 90%+ auto-resolution without disrupting operations.

Ambuj Kumar

January 13, 2026

SOC

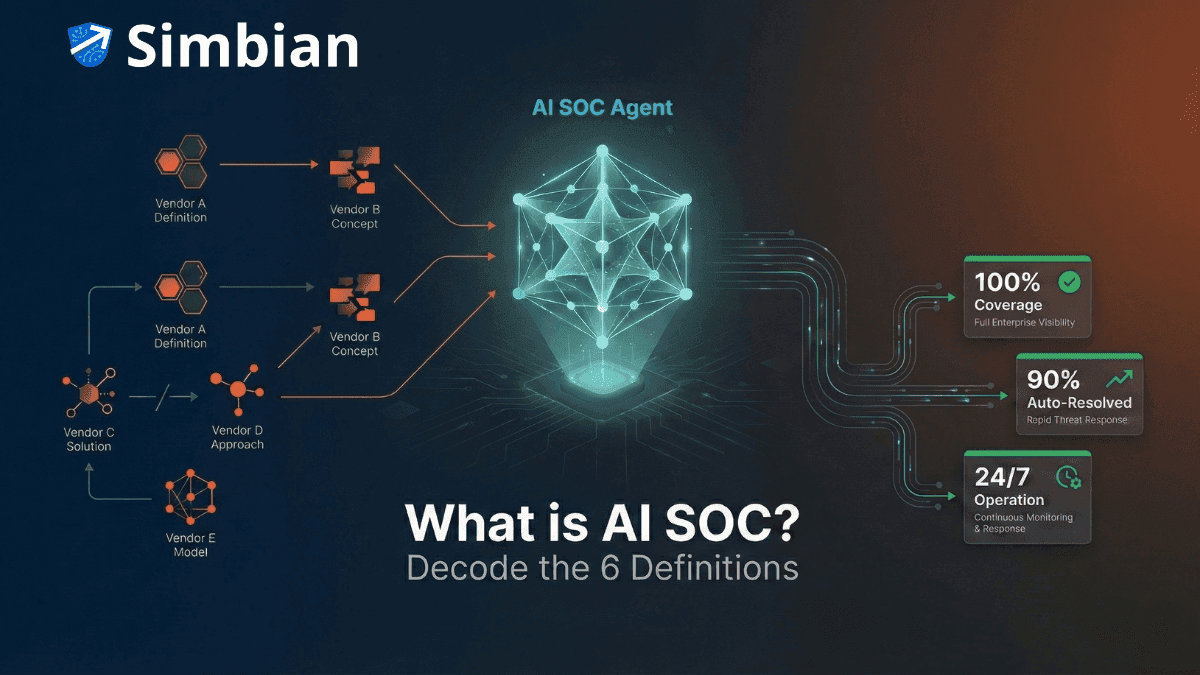

What is AI SOC? Decoding the 6 Definitions of "AI SOC" in 2026

Not all AI SOC solutions are created equal. Learn the 6 different definitions vendors use and which AI SOC capabilities actually solve alert overload in 2026.

Sumedh Barde

January 6, 2026

SOC

Happy New Year, and Welcome to "Year One of the AI SOC"!

Celebrate the inaugural year of the AI SOC. Discover how autonomous security agents are redefining cyber defense and what lies ahead for 2026.

Kosuke Ito

January 1, 2026

SOC

The 2026 SOC Reality: Should CISOs Look at AI for Cybersecurity?

CISOs face 1,000+ daily alerts, with 40% going uninvestigated. Discover why traditional SOC automation fails and how AI SOC agents solve the 2026 security mandate.

Shivang Kalsi

December 29, 2025

SOC

The Elephant in the SOC: Your Security Blind Spots

Discover why your collection of cybersecurity point solutions may be creating dangerous blind spots, leaving you vulnerable to sophisticated attacks. This article uses the "blind men and the elephant" analogy to explain how isolated, low-severity alerts can be part of a larger, coordinated attack and why correlating events across your security stack is essential for modern threat detection.

Varun Anand

November 19, 2025

Security

How to Lose an AI Gunfight: Bring a Knife and Asset-Centric Security

In an era where AI has supercharged cyber-offense, asset-centric security is no longer enough. This post explores why a shift to an attacker's perspective is critical for survival, detailing the four pillars of modern offensive security: penetration testing, red teaming, attack surface management (ASM), and breach & attack simulation (BAS). Learn why traditional, compliance-driven security is falling behind and how to adapt to the new speed of cyber threats.

Sumedh Barde

November 17, 2025

Security

Anthropic's AI Espionage Report: Why AI SOC Is Critical for Defense

An AI spy just infiltrated 30 global targets. Here's why your SOC needs AI defense and how Simbian responds to the threat.

Igor Kozlov

November 14, 2025

SOC

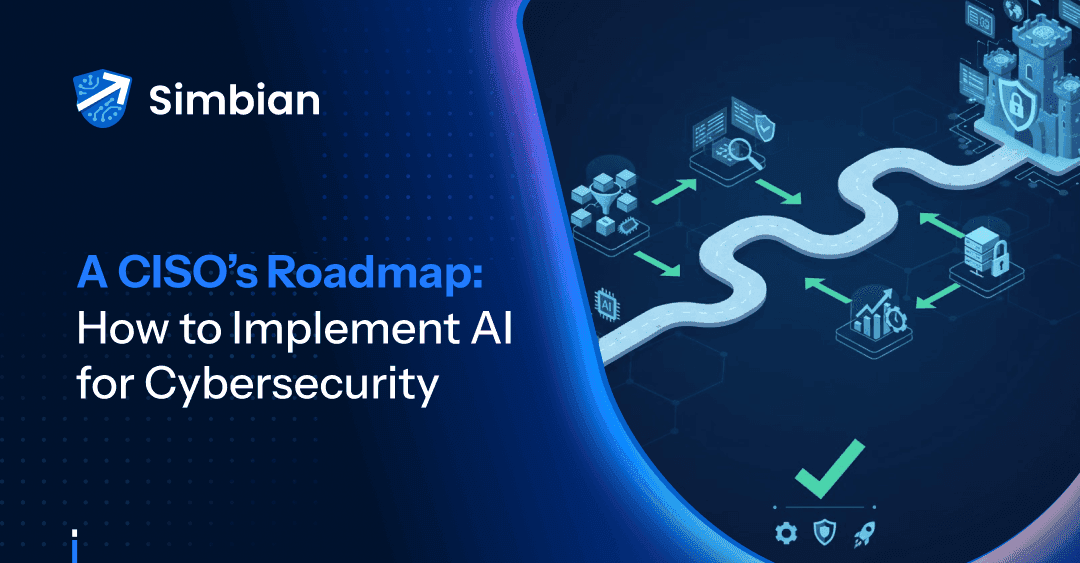

A CISO’s Roadmap: How to Implement AI for Cybersecurity

Discover a practical three-phase roadmap for CISOs to implement AI in cybersecurity—from piloting low-risk alerts to achieving full autonomous SOC operations. Learn how to overcome adoption challenges, strengthen governance, and amplify human intelligence with AI-driven defense.

Varun Anand

October 29, 2025

SOC

The Fears of a CISO: Why Your SOC Team is Struggling

Discover why modern SOC teams are struggling with alert fatigue, EDR bypass techniques, and the cybersecurity skills gap — and how CISOs are reimagining defense with AI-powered SOC architectures that blend human expertise and autonomous intelligence.

Ambuj Kumar

October 20, 2025
