We've all been hearing a lot about Large Language Models (LLMs) and their potential, but how do they stack up in the demanding world of a Security Operations Center (SOC)? We've been diving deep into this question, and the results offer some fascinating, data-driven insights into the capabilities of AI in defensive cybersecurity.

For the first time ever, we've developed a comprehensive benchmarking framework specifically designed for AI SOC. What makes this different from existing cybersecurity benchmarks like CyberSecEval or CTIBench? The key lies in its realism and depth.

Forget generic scenarios. Our benchmark is built on the autonomous investigation of 100 full kill-chain scenarios that realistically mirror what human SOC analysts face every day. To achieve this, we created diverse attack scenarios grounded in real-world behavior, each with known ground truth of malicious activity, so that AI agents can investigate and be assessed against a clear baseline. The scenarios are based on the historical behavior of well-known APT groups (like APT32, APT38, APT43) and cybercriminal organizations (Cobalt Group, Lapsus$), covering a wide range of MITRE ATT&CK™ Tactics and Techniques, with a focus on prevalent threats like ransomware and phishing.

To facilitate this rigorous testing, we leveraged our Simbian AI SOC agent. Our evaluation process is evidence-grounded and data-driven. It always kicks off with a triggered alert, realistically representing the way SOC analysts operate. The AI agent then needs to determine whether the alert is a True or False Positive, find evidence of malicious activity (think "CTF flags" in a red teaming exercise), correctly interpret that evidence by answering security-contextual questions, and provide a high-level overview of the attacker's activity. This evidence-based approach is critical for managing hallucinations, ensuring the LLM isn't just guessing but validating its reasoning.
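To make the evaluation steps concrete, here is a minimal Python sketch of how one investigation could be scored against a scenario's ground truth. It is not Simbian's actual scoring code: the class names, field names, flag identifiers, and the equal weighting of tasks are all assumptions made for illustration. The high-level attack overview is left out, since grading free text would need a separate reviewer or rubric.

```python
from dataclasses import dataclass, field


@dataclass
class GroundTruth:
    """Known facts about one scenario (all field names are hypothetical)."""
    is_true_positive: bool
    evidence_flags: set[str]          # identifiers of planted malicious evidence
    answers: dict[str, str]           # question id -> expected answer


@dataclass
class AgentReport:
    """What the agent submits after investigating the triggering alert."""
    verdict_true_positive: bool
    evidence_found: set[str] = field(default_factory=set)
    answers: dict[str, str] = field(default_factory=dict)


def score_investigation(truth: GroundTruth, report: AgentReport) -> float:
    """Return the fraction of checkable tasks the agent got right (0.0 to 1.0).

    Assumption: the verdict, each evidence flag, and each question
    count as one task of equal weight.
    """
    total = 1 + len(truth.evidence_flags) + len(truth.answers)
    correct = int(report.verdict_true_positive == truth.is_true_positive)
    # Only flags present in the ground truth earn credit, so invented
    # (hallucinated) evidence cannot raise the score.
    correct += len(report.evidence_found & truth.evidence_flags)
    # Answers are compared case-insensitively after trimming whitespace.
    correct += sum(
        1
        for qid, expected in truth.answers.items()
        if report.answers.get(qid, "").strip().lower() == expected.strip().lower()
    )
    return correct / total


truth = GroundTruth(True, {"flag_a", "flag_b"}, {"q1": "powershell.exe"})
report = AgentReport(True, {"flag_a", "made_up"}, {"q1": "PowerShell.exe"})
print(score_investigation(truth, report))  # 3 of 4 tasks correct
```

Matching evidence against a fixed set of known flags is what keeps the score tied to validated findings rather than to plausible-sounding claims.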

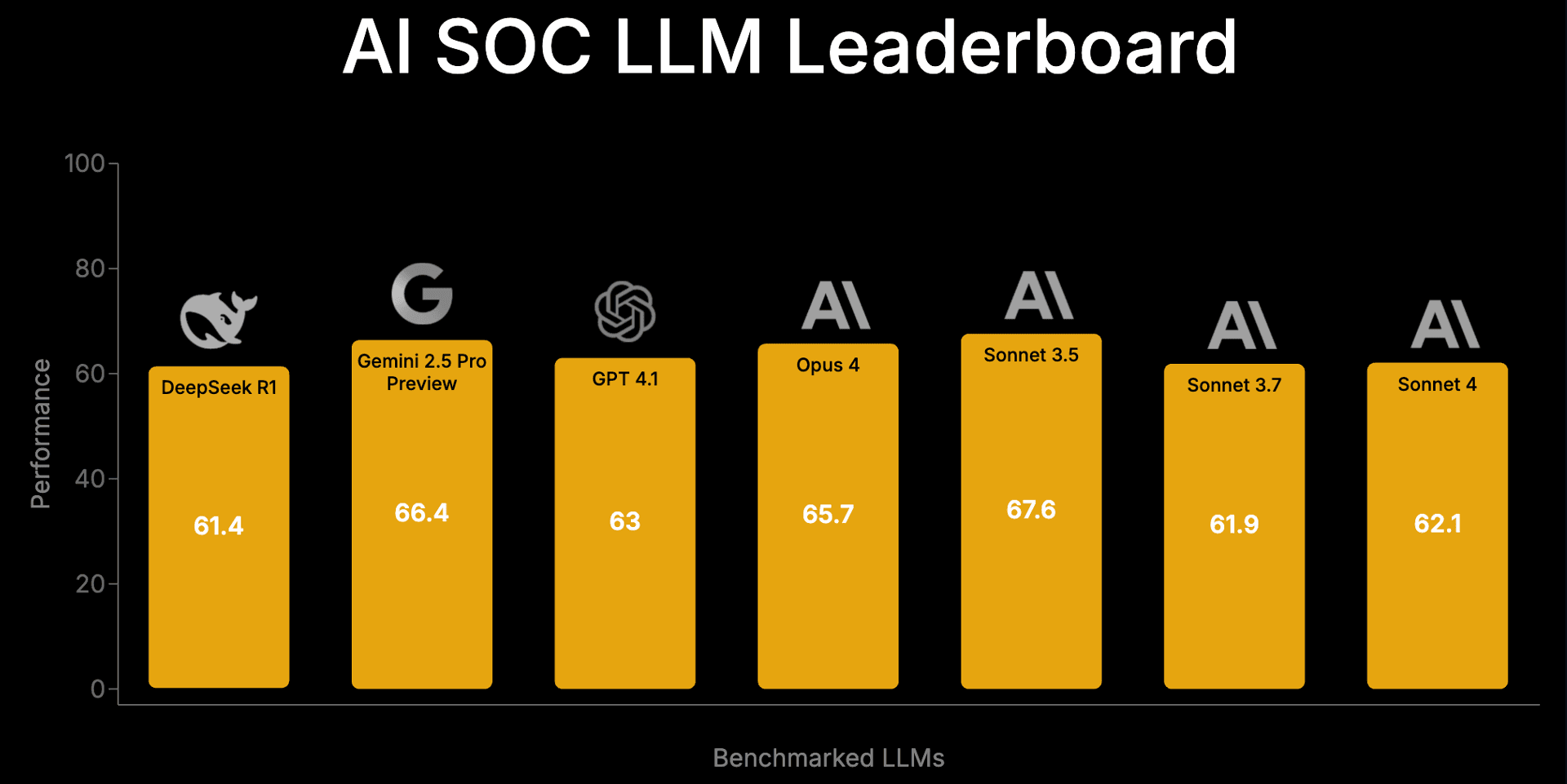

We benchmarked some of the most well-known and high-performing models available as of May 2025, including models from Anthropic, OpenAI, Google, and DeepSeek. And the results? Quite promising! All high-end models were able to complete over half of the investigation tasks, with performance ranging from 61% to 67%. For reference, during the first AI SOC Championship the best human analysts, powered by AI SOC, scored in the range of 73% to 85%, while Simbian's AI Agent at extra-effort settings reached 72%. This suggests that LLMs are capable of much more than just summarizing and retrieving data; their capabilities extend to robust alert triage and tool use via API interactions.

One noteworthy finding is the importance of thorough prompt engineering and agentic flow engineering, which involves feedback loops and continuous monitoring. In initial runs, some models struggled, requiring improved prompts and fallback mechanisms for the coding agents that analyze retrieved data. This leads to a second key finding: AI SOC applications lean heavily on the software engineering capabilities of LLMs.
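As a rough illustration of what a feedback loop with a fallback can look like for a coding agent, consider the Python sketch below. It is an assumption-laden example, not a description of Simbian's agent: `analyze_with_fallback`, `generate_code`, and the convention that generated code defines a function named `analyze` are all hypothetical. The model call is passed in as a plain callable so the loop can be exercised without any LLM.

```python
from typing import Callable, Optional


def analyze_with_fallback(
    data: list[dict],
    generate_code: Callable[[str, Optional[str]], str],
    fallback: Callable[[list[dict]], object],
    task: str,
    max_attempts: int = 3,
) -> object:
    """Run model-written analysis code, feeding errors back on failure.

    generate_code(task, last_error) stands in for an LLM call and must
    return Python source that defines analyze(rows). If every attempt
    fails, a deterministic fallback handles the data instead.
    """
    error: Optional[str] = None
    for _ in range(max_attempts):
        source = generate_code(task, error)
        namespace: dict = {}
        try:
            # WARNING: exec runs arbitrary code. A real system would need
            # an isolated sandbox; this is only acceptable in a sketch.
            exec(source, namespace)
            return namespace["analyze"](data)
        except Exception as exc:
            # Feedback loop: the next attempt sees what went wrong.
            error = f"{type(exc).__name__}: {exc}"
    return fallback(data)


def fake_model(task: str, last_error: Optional[str]) -> str:
    """Stand-in generator: buggy on the first try, fixed after feedback."""
    if last_error is None:
        return "def analyze(rows):\n    return rows[0]['missing']"
    return "def analyze(rows):\n    return len(rows)"


rows = [{"host": "ws-01"}, {"host": "ws-02"}]
print(analyze_with_fallback(rows, fake_model, lambda r: -1, "count rows"))
```

Here the first attempt raises a `KeyError`, the error text is passed back to the generator, and the second attempt succeeds. Bounding the number of attempts keeps cost predictable, and the fallback guarantees the investigation can continue even when code generation keeps failing.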

Perhaps most surprisingly, Sonnet 3.5 sometimes outperformed newer versions like Sonnet 3.7 and 4.0. We speculate this could be due to "catastrophic forgetting," where further domain specialization for software engineering or instruction following might detrimentally affect cybersecurity knowledge and investigation planning. This underscores the critical need for benchmarking to evaluate the fine-tuning of LLMs for specific domains.

Finally, we found that "thinking models" (those that use post-training techniques and often engage in internal self-talk) didn't show a considerable advantage in AI SOC applications, with all tested models demonstrating comparable performance. This echoes the findings of studies on software bug fixing and red team CTF applications, which suggest that once LLMs hit a certain capability ceiling, additional inference leads to only marginal improvements, often at a higher cost. This points to the necessity of human-validated LLM applications in AI SOC and the continued development of fine-grained, specialized benchmarks for improving cybersecurity domain-focused reasoning.

The AI SOC LLM Leaderboard measures LLMs using Simbian's AI SOC Agent, a proven framework for using AI within the SOC. The AI SOC Agent is deployed at some of the largest SOCs in the world. Additionally, in the recent AI SOC Championship 2025, which drew over 100 analysts worldwide, the AI SOC Agent performed better than 95% of the participants in correctly investigating alerts with supporting evidence.

For the full details and results of the benchmarking, click here. We look forward to leveraging this benchmark to evaluate new foundation models on a regular basis, and plan to share our findings with the public. Stay tuned for a more in-depth presentation of our research methodology and findings at an upcoming cybersecurity conference.