
Security operations can’t tolerate disruption. Too often, a new security tool, particularly an automation tool, fails to deliver results. It wastes budget, erodes analyst confidence, swells alert backlogs, and leaves your organization vulnerable.

But organizations implementing AI SOC agents at scale are seeing very different results. They've figured out how to implement autonomous investigation and response without disrupting existing workflows, without replacing analyst teams, and without the months-long implementation timelines and unmet expectations that plague traditional security tools.

The biggest misconception about AI SOC agents is that they require you to rebuild your entire security infrastructure. That's false.

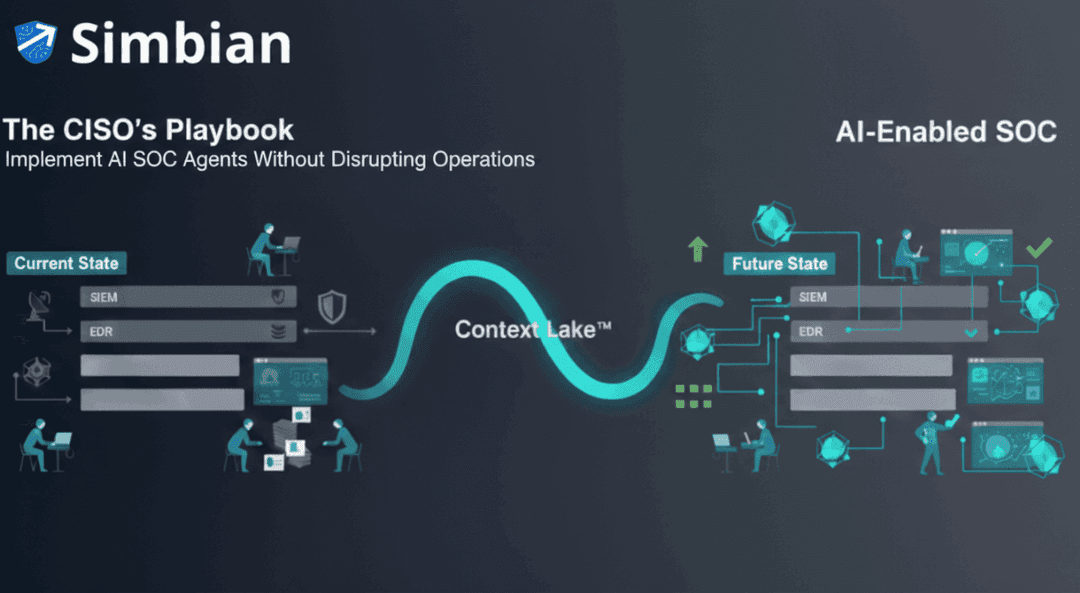

Your SIEM isn't going anywhere. Neither are your EDR, CDR, or threat intelligence platforms. These detection tools continue to generate alerts exactly as they do today, and detection engineering teams keep building and tuning rules. You don't replace that work; AI SOC agents consume it as fuel.

Your incident response processes remain unchanged. When an alert escalates to "true positive requiring action," it is routed to your incident response, legal, and communications teams, exactly as it is today. Your systems of record (identity tools, CMDB, device management platforms) all continue to function as authoritative sources of context.

AI SOC agents take over the work that consumes 60-70 percent of analyst time: the middle layer of the threat detection and response (TDR) pipeline, where alerts are triaged, investigated, and analyzed before any response decision is made.

L1 triage and alert prioritization, the work that currently has junior analysts clicking through 400 alerts per shift, becomes autonomous. AI SOC agents evaluate every alert, assign severity based on contextual reasoning, and immediately route critical threats to the appropriate tier.
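To make "assign severity based on contextual reasoning" concrete, here is a minimal Python sketch of context-adjusted scoring and routing. It is not Simbian's implementation: the `Alert` fields, the weights, and the tier names and thresholds are hypothetical assumptions chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    # Hypothetical fields; a real deployment would map these from SIEM/EDR output.
    rule_name: str
    base_severity: int        # 1 (low) .. 4 (critical), as emitted by the detection rule
    asset_criticality: int    # 0..3, e.g. looked up from a CMDB (assumed scale)
    user_is_privileged: bool  # e.g. from the identity platform
    ti_match: bool            # an indicator matched a threat-intel feed


def contextual_score(alert: Alert) -> int:
    """Adjust the rule's base severity with context (illustrative weights only)."""
    score = alert.base_severity * 10
    score += alert.asset_criticality * 5
    if alert.user_is_privileged:
        score += 10
    if alert.ti_match:
        score += 15
    return score


def route(alert: Alert) -> str:
    """Map the contextual score to a handling tier (hypothetical thresholds)."""
    score = contextual_score(alert)
    if score >= 50:
        return "escalate-now"            # hand to on-call responders immediately
    if score >= 30:
        return "investigate"             # send to automated L2 investigation
    return "auto-close-with-summary"     # close, but keep a written rationale
```

With these assumed weights, the same rule with base severity 2 scores 60 and escalates when it fires on a critical server for a privileged user with a threat-intel hit, but scores 20 and is auto-closed with a summary on an unprivileged test laptop.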

L2 investigation (evidence collection, log correlation, and baseline comparison) runs at machine speed. Instead of analysts manually pulling data from five different tools, the AI investigates while analysts watch dashboards. In this model, 95% of L2 work is automated.
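Log correlation across tools can be pictured as building a single timeline around an alert. The sketch below is a simplified illustration, not any vendor's method: it assumes each source has already been normalized into dictionaries with hypothetical `host`, `time`, and `detail` keys, and it correlates only on host and a fixed time window.

```python
from datetime import datetime, timedelta


def build_timeline(alert_host, alert_time, sources, window=timedelta(minutes=15)):
    """Collect events about one host from several sources within +/- window.

    sources: dict mapping a source name (e.g. "edr", "proxy") to a list of
    event dicts with "host", "time", and "detail" keys (assumed schema).
    Returns (time, source, detail) tuples sorted chronologically.
    """
    timeline = []
    for source_name, events in sources.items():
        for event in events:
            same_host = event["host"] == alert_host
            in_window = abs(event["time"] - alert_time) <= window
            if same_host and in_window:
                timeline.append((event["time"], source_name, event["detail"]))
    timeline.sort()
    return timeline
```

A production investigation would also pivot on users, process IDs, and indicators rather than host alone, but the single merged timeline is what replaces an analyst tabbing between five consoles.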

L3 deeper analysis, where senior analysts hunt for campaign indicators, correlate attack patterns across weeks of data, and identify relationships between seemingly unrelated alerts, shifts to AI systems that process thousands of alerts simultaneously. Campaign correlation that takes humans weeks becomes machine work.
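One classic way to find relationships between seemingly unrelated alerts is to treat shared indicators as links and compute connected components with a union-find structure. The sketch below illustrates that general technique under the assumption that each alert carries a set of extracted indicators (IPs, hashes, usernames); it is not a description of how any particular product correlates campaigns.

```python
from collections import defaultdict


def correlate_campaigns(alerts):
    """Group alert IDs that share any indicator, directly or transitively.

    alerts: dict mapping alert_id -> set of indicator strings.
    Returns a list of sets of alert IDs; each set is one candidate campaign.
    """
    parent = {alert_id: alert_id for alert_id in alerts}

    def find(x):
        # Path halving keeps the union-find trees shallow.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    def union(a, b):
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a

    first_seen = {}  # indicator -> first alert that carried it
    for alert_id, indicators in alerts.items():
        for indicator in indicators:
            if indicator in first_seen:
                union(first_seen[indicator], alert_id)
            else:
                first_seen[indicator] = alert_id

    groups = defaultdict(set)
    for alert_id in alerts:
        groups[find(alert_id)].add(alert_id)
    return list(groups.values())
```

For example, if A1 and A2 share an IP and A2 and A3 share a file hash, all three land in one cluster even though A1 and A3 have nothing in common directly. In practice you would exclude ubiquitous indicators (a shared DNS resolver, a popular CDN) before linking, or everything collapses into one giant "campaign."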

Tier 1 analysts stop being alert processors and become coaches. Instead of triaging every alert themselves, they review AI triage decisions, validate the confidence scoring, and provide feedback when the system gets it wrong. They validate 50 alerts per shift instead of processing 400.

The focus shifts: from "process alerts quickly" to "teach the AI what we care about." Analysts spend time describing what good investigations look like, explaining organizational context ("Yes, it's normal for John to log in from the Philippines on Thursdays"), and correcting the AI when it misunderstands intent.
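Organizational context like the John example is most useful when it is captured as something the system can check, not just remembered. The sketch below assumes a hypothetical `ContextRule` schema (user, country code, weekday, free-text note) and shows how such an analyst-supplied rule could be matched against a login; real products may store this context very differently.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class ContextRule:
    """An analyst-supplied statement of what is normal (hypothetical schema)."""
    user: str
    country: str   # ISO country code, e.g. "PH"
    weekday: int   # Monday=0 ... Sunday=6, matching datetime.weekday()
    note: str      # the analyst's explanation, kept for audit


def is_expected_login(user, country, when, rules):
    """Return the matching rule if analysts declared this login normal, else None."""
    for rule in rules:
        if rule.user == user and rule.country == country and rule.weekday == when.weekday():
            return rule
    return None
```

Returning the rule itself, rather than a bare boolean, lets an investigation cite the analyst's note when it explains why a login was not treated as suspicious.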

Detection engineering becomes a primary analyst responsibility. With investigations automated, your best analysts move into detection engineering: improving detection rules, filling gaps in MITRE ATT&CK coverage, and shifting left on the attack timeline. This work matters more strategically than alert triage ever did.

Threat hunting moves from reactive (only happens when there's time) to proactive (happens constantly because analysts have freed time).

Your most senior analysts become AI quality assurance: ensuring the system understands your threat model, validating recommendations for edge cases, and training new models when your environment changes.

AI SOC agents don't arrive pre-trained on your organization. They come pre-trained on the global threat landscape. Your job is teaching them what normal looks like in your environment, what matters to your business, and how your team thinks about security decisions.

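Four distinct knowledge-transfer mechanisms run in parallel: documentation ingestion, real-time corrections, observation of analyst work, and automated context integration.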

Your organizational memory lives in documents and in your people. Pull together your existing playbooks, runbooks, standard operating procedures, and incident response frameworks. If you have documentation in Confluence, SharePoint, or GitHub, the AI SOC agent consumes it. Add the conversations that happen in tools like Slack and Microsoft Teams, along with any other useful knowledge an experienced analyst would share with a new analyst. Historical incident reports and post-mortems become training data.

As the AI investigates alerts alongside analysts, corrections occur in real time via chat interfaces. An analyst sees an investigation path and says, "That's not the right direction—look at the EDR data for execution context instead." The AI receives that feedback, adjusts its investigation pattern, and immediately applies the correction to similar alerts.
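One simple way to picture "immediately applies the correction to similar alerts" is a per-rule investigation plan that an analyst can reorder. The sketch below is a hypothetical model, not a vendor API: it approximates "similar alerts" as alerts produced by the same detection rule, and it represents a plan as an ordered list of data sources.

```python
class InvestigationPlanner:
    """Per-detection-rule ordering of data sources, updated by analyst corrections.

    Hypothetical sketch: "similar alerts" means "alerts from the same rule".
    """

    def __init__(self, default_sources):
        self.default_sources = list(default_sources)
        self.overrides = {}  # rule_name -> corrected source ordering

    def plan(self, rule_name):
        """Return the data sources to consult, in order, for this rule."""
        return list(self.overrides.get(rule_name, self.default_sources))

    def correct(self, rule_name, preferred_source):
        """Record an analyst saying 'look at <preferred_source> first' for this rule."""
        order = self.plan(rule_name)
        if preferred_source in order:
            order.remove(preferred_source)
        order.insert(0, preferred_source)
        self.overrides[rule_name] = order
```

After `planner.correct("suspicious_process", "edr")`, every later alert from that rule starts with EDR execution context, while other rules keep the default order. A real system would generalize "similar" far more broadly than an exact rule-name match.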

Analysts performing their usual investigation work while the AI observes create a robust training signal. The AI watches human decision-making patterns, sees which data sources analysts consult first, notices which contextual information changes investigative conclusions, and learns the sequence of investigative steps.
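As a toy illustration of learning "the sequence of investigative steps" by observation, the sketch below ranks data sources by their mean position across recorded analyst sessions for one alert type. The session format is an assumption, and mean position is a deliberately simple stand-in for whatever model a real product uses.

```python
from collections import defaultdict


def learn_step_order(sessions):
    """Derive a typical investigation order from observed analyst sessions.

    sessions: list of lists; each inner list is the ordered data sources one
    analyst consulted for a given alert type. Sources are ranked by mean
    position (0 = consulted first); ties break alphabetically.
    """
    positions = defaultdict(list)
    for steps in sessions:
        for index, source in enumerate(steps):
            positions[source].append(index)
    mean_position = {source: sum(p) / len(p) for source, p in positions.items()}
    return sorted(mean_position, key=lambda source: (mean_position[source], source))
```

One known weakness: a source consulted only once, but first, would outrank a source every analyst checks second. Weighting by how often each source is consulted is a natural refinement.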

The AI SOC agent integrates with your identity systems to answer the "John in Russia" question: is this login location normal for this user? It pulls asset criticality rankings from your CMDB, collects behavioral baselines for users and systems, and ingests threat intelligence and global attack pattern data.

This is automated integration, not manual configuration. The AI connects to your identity platform on day one and starts building baselines of what's normal.
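A behavioral baseline for the "John in Russia" question can start as simply as remembering which countries each user has logged in from. The sketch below assumes login history arrives as hypothetical `(user, country)` pairs from the identity platform. It shows only the idea of first-seen detection; production baselines typically add time decay, minimum sample sizes, and network-level signals.

```python
from collections import defaultdict


def build_login_baseline(login_events):
    """Map each user to the set of countries seen in historical logins.

    login_events: iterable of (user, country_code) pairs (assumed format).
    """
    baseline = defaultdict(set)
    for user, country in login_events:
        baseline[user].add(country)
    return baseline


def is_anomalous_login(baseline, user, country):
    """Flag a login from a country never seen for this user, or from an unknown user."""
    # .get() avoids creating an empty entry in the defaultdict for unknown users.
    return country not in baseline.get(user, set())
```

With a history of John logging in from the US and the Philippines, a Philippines login is unremarkable while a first-ever login from Russia is flagged for investigation.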

In Simbian’s AI SOC Agent, all four types of context are consolidated into the Simbian Context Lake™. More than 75 integrations gather evidence across institutional touchpoints, regardless of network, device, or tool. With every alert, data point, and resolution, the agent becomes more capable and better prepared for the next alert, all without your SOC team lifting a finger or editing an outdated playbook.

Deploying AI SOC agents happens in phases. Each phase proves value before moving to the next, reducing risk and building analyst confidence.

In the first phase, the AI SOC agent runs in shadow mode. It processes 100 percent of your alerts, every single one, but takes zero autonomous actions. It doesn't suppress alerts, modify severity, or execute any containment. It only investigates and reports.
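The defining property of shadow mode is that the agent's output is a report, never an action. The sketch below makes that explicit under stated assumptions: `investigate` is a hypothetical caller-supplied function returning a verdict and a list of proposed actions, and each report keeps an `executed_actions` field that shadow mode never fills.

```python
from dataclasses import dataclass, field


@dataclass
class ShadowReport:
    alert_id: str
    verdict: str
    proposed_actions: list = field(default_factory=list)
    executed_actions: list = field(default_factory=list)  # always empty in shadow mode


def run_in_shadow_mode(alerts, investigate):
    """Investigate every alert and report; never suppress, re-score, or contain.

    alerts: list of dicts with an "id" key (assumed schema).
    investigate: hypothetical callable returning (verdict, proposed_actions).
    """
    reports = []
    for alert in alerts:
        verdict, actions = investigate(alert)
        reports.append(ShadowReport(alert["id"], verdict, list(actions)))
    return reports
```

Because the original alerts flow to analysts untouched, each report can later be compared with what the analyst actually decided, which is exactly the agreement data the next phase measures.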

In the second phase, the AI handles initial triage and evidence collection. It presents investigation packages to L2 analysts: pre-assembled summaries with relevant logs, threat intelligence, behavioral context, and preliminary conclusions.

Success Metric: Aim for analysts to approve at least 85% of the AI's recommendations.

If that approval rate dips below 75%, you likely have a "context translation" issue. This means the AI understands the technical threat but is failing to grasp your organization's specific priorities or risk tolerance. When this happens, pause immediately and recalibrate the system to better align with your business goals before continuing.
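The two thresholds above translate directly into a simple check on reviewed recommendations. The 85% target and 75% pause level come from the text; what to do between them is not specified, so the middle "keep monitoring" status is an assumption of this sketch.

```python
def approval_status(approved, total, target=0.85, pause_below=0.75):
    """Classify the analyst approval rate of AI recommendations.

    Returns (rate, status). The 75-85% band handling is an assumption.
    """
    if total == 0:
        raise ValueError("no reviewed recommendations yet")
    rate = approved / total
    if rate >= target:
        return rate, "on track"
    if rate < pause_below:
        return rate, "pause and recalibrate"
    return rate, "below target: keep monitoring"
```

For example, 180 approvals out of 200 reviews is 90% and on track, 160 of 200 is 80% and below target, and 140 of 200 is 70%, which signals a context-translation problem worth pausing for.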

In the third phase, the AI fully handles low- and medium-severity alerts. It triages, investigates, correlates, and generates responses autonomously, with no analyst review required unless it flags something as uncertain.

Automated containment is enabled for safe responses: suspending users, blocking IP addresses, and blocking file hashes. These are reversible actions that don't require human approval.
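The phase-three guardrail can be expressed as a small policy check. In the sketch below, the three reversible actions and the low/medium scope come from the text, but the action identifiers (`suspend_user`, `block_ip`, `block_file_hash`) and the boolean `uncertain` flag are hypothetical names, not a product API.

```python
# Hypothetical identifiers for the reversible actions named in the text.
REVERSIBLE_ACTIONS = {"suspend_user", "block_ip", "block_file_hash"}
AUTONOMOUS_SEVERITIES = {"low", "medium"}


def may_auto_execute(action: str, severity: str, uncertain: bool) -> bool:
    """Allow autonomous execution only for reversible actions on low/medium
    alerts the agent is confident about; everything else goes to a human."""
    return (
        action in REVERSIBLE_ACTIONS
        and severity in AUTONOMOUS_SEVERITIES
        and not uncertain
    )
```

An allowlist, rather than a blocklist, is the conservative choice here: any new or irreversible action (for example, isolating a host or wiping a device) is denied by default until someone deliberately adds it.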

In the final phase, the AI handles all severity levels, with human oversight becoming advisory rather than mandatory. Escalation happens only for strategic decisions, novel attack patterns that don't match known frameworks, or situations where the AI flags uncertainty.

Humans retain final approval on high-stakes decisions, but the efficiency gain is dramatic. Instead of the current 15–30-minute cycle, total clock time drops to under 10 minutes. Crucially, the active analyst effort required for that review drops to less than one minute.

Using this phased framework, organizations consistently achieve 90%+ auto-resolution within three weeks of full deployment.

The question isn't whether to implement AI SOC agents; it's how fast you can get there. AI SOC agent technology is mature. It works, and it can move your organization to a new operational model while maintaining security, analyst confidence, and alert quality.

Experience these game-changing AI SOC capabilities with Simbian's autonomous agents. Book a demo to see Context Lake, graph-based reasoning, and multi-agent systems in action.