"Continuous compliance assessment." In other words, rather than a point-in-time assessment of compliance with security policies (during which everyone gets a little more careful), you'd probably be better served by assessing policy compliance continuously.

You've heard of the idea. Some vendors have probably tried to sell it to you in some form or another. But in the real world, very few security programs manage to achieve it consistently at scale.

Can it be cost-effective, produce results, and not annoy every employee at your company? Perhaps. I'll propose a way to leverage AI to make some progress toward that goal. But first, I'm going to share a little secret:

I don't believe in it.

Or at least, not in the version of it most of the GRC world is peddling. I don't believe in policing the behavior of people whose jobs are not engineering or security focused. This excludes obviously negligent acts - ones that the average person should recognize without any security training whatsoever. Beyond that more egregious behavior, I challenge the status quo that "security is everyone's job" and believe the responsibility for achieving effective security posture must fall squarely on the shoulders of the security and engineering professions.

Post a radical idea like this on Reddit, and you'll soon hear the distressed taps of 10,000 keyboards, fire burning beneath the fingers of security professionals around the world, outraged that someone would dare utter such a thought in their direction. Everyone would rather blame users for failing to comply with policy.

I think it's time we take responsibility. Security is not everyone's job. It's mine. And that's one of the reasons I joined Simbian. To bring much-needed innovation into this space. To enable security operations powerful (and scalable) enough, at a reasonable cost, for employees to focus on...

Gasp

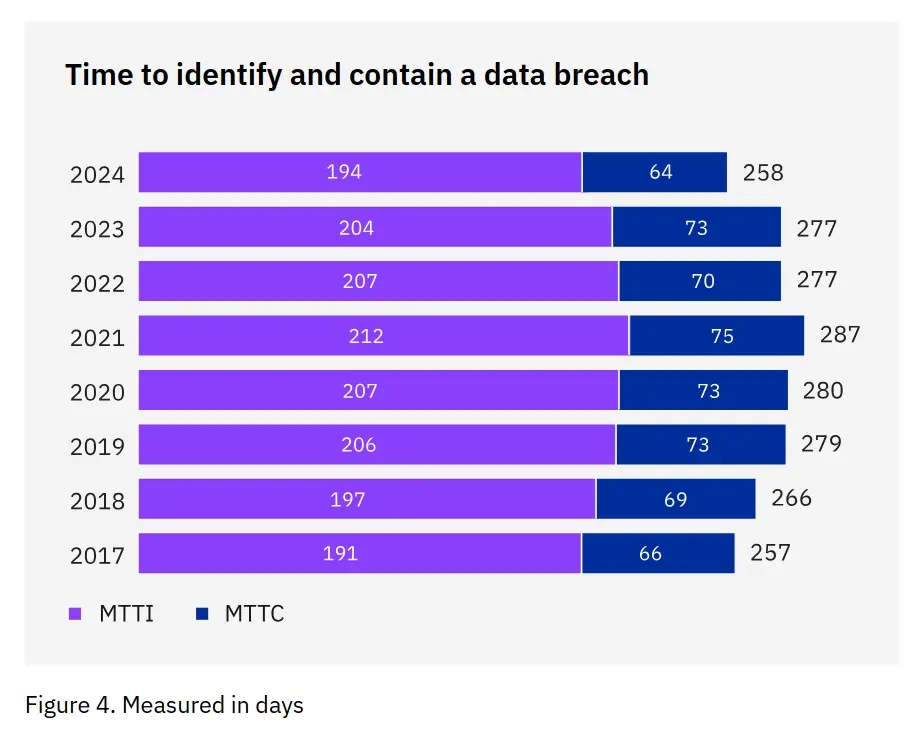

...their own jobs. People have work to do and should be able to do that work without knowing and following 101 different security policies. How long is the Mean Time to Identify (MTTI) a breach? 194 days on average, according to IBM's 2024 Cost of a Data Breach report.

We can't manage to detect intruders for 194 days and we're blaming the hard worker in finance who fell for a phishing email when things go sideways? Give me a break.

Now, to be fair: GRC is far more than human behavior compliance. It's also compliance with what I like to call security-as-infrastructure policies. For example: policies that dictate the company go fully passwordless (preferably with physical FIDO2 tokens) so that worker in finance literally cannot have their password phished.

Now I've had this conversation before. I already know your retort. The cost! Those physical tokens/log retention periods/cold storage processes/privilege modification auditing/etc are costly!

And you're absolutely right. Which is why it's so critical that hiring a human with the intelligence and patience to read and understand all those NIST SPs and the ATT&CK framework, and to earn those SANS certs (rare and therefore expensive), cannot be the only means of achieving robust GRC assessment and SOC analysis.

That's why I took on the really tough challenge of building AI-powered SOC and GRC. Because yes, we do need to get way more analysis done without hiring more analysts. And we do need to continuously assess compliance against a whole lot of ISO 27000 series standards and NIST SP controls without hiring more GRC professionals. And we do need to cut some costs so our security programs can afford to implement some of those more expensive (and far more effective) security-as-infrastructure policies I'm so fond of, rather than putting the burden of security on non-security professionals.

"The security team can't be everywhere," we often say. But with AI, perhaps it can. AI analysts promise to scale up traditionally time-consuming GRC tasks (audits of permission changes, for example) that were previously not feasible for human analysts to work through manually. They may even enable previously unimagined real-time audits, such as assessing malicious emails or direct messages that have gotten past other filters, perhaps even lateral communications by compromised company accounts. These are just a few ways the AI era promises to power up both the GRC and SOC sides of security operations while potentially even lowering overall costs.
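To make the permission-change example concrete, here is a minimal sketch of what auditing every change (rather than sampling a few at audit time) could look like. Everything in it is an assumption for illustration: the `PermissionChange` record, the `PRIVILEGED_ROLES` set, and the two rules are hypothetical and do not describe Simbian's product or any particular standard. In practice the rule logic is where an AI analyst might help; the loop around it is the easy part.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, List, Optional, Tuple

# Hypothetical set of roles that should never be granted without approval.
PRIVILEGED_ROLES = {"admin", "owner"}


@dataclass(frozen=True)
class PermissionChange:
    """Hypothetical record of one permission change event."""
    actor: str                # who made the change
    target: str               # whose permissions changed
    role_granted: str         # role that was granted
    ticket_id: Optional[str]  # approval ticket, if any


def audit(change: PermissionChange) -> List[str]:
    """Return the list of policy findings for a single change."""
    findings = []
    if change.role_granted in PRIVILEGED_ROLES and change.ticket_id is None:
        findings.append("privileged grant without approval ticket")
    if change.actor == change.target:
        findings.append("self-granted role")
    return findings


def audit_stream(
    changes: Iterable[PermissionChange],
) -> Iterator[Tuple[PermissionChange, List[str]]]:
    """Check every change as it arrives and yield only the violations."""
    for change in changes:
        findings = audit(change)
        if findings:
            yield change, findings


if __name__ == "__main__":
    events = [
        PermissionChange("alice", "bob", "viewer", None),
        PermissionChange("carol", "carol", "admin", None),
        PermissionChange("dave", "erin", "admin", "CHG-1024"),
    ]
    for change, findings in audit_stream(events):
        print(change.actor, "->", change.target, findings)
```

The point of the generator is that the check runs on every event as it happens, which is the difference between continuous assessment and a snapshot audit.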

And the naysayers who believe AI in GRC and SOC workloads is a vendor cash grab with no real-world upside, destined to become the next cybersecurity meme (looking at you, Jonathan Rau, and your recent 🔗 Point #3)... They have a point. Our industry is full of vendors making overblown claims about their hacked-together ChatGPT + RAG SOC-in-a-Box solutions to very hard problems. But there are also engineers like myself at Simbian, backed by technically focused founders like Ambuj Kumar, and I'll even throw a shout-out to a competitor of ours, Leo Meyerovich with Graphistry, Inc. Engineers who believe in producing evidence-backed, highly technical results, inspired by a love for problem solving and progress in our fields.

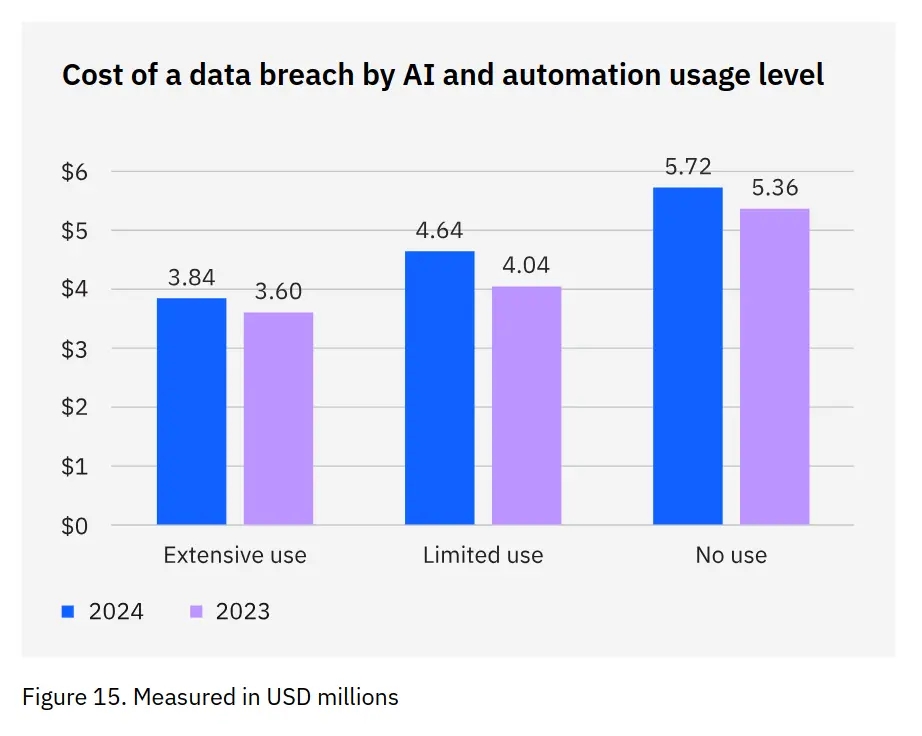

And to reference IBM's reported data once more: AI is already proving to significantly reduce the cost of a breach.

"The more organizations used AI and automation, the lower their average breach costs. That correlation is striking and one of the key findings of this year’s report. Organizations not using AI and automation had average costs of USD 5.72 million, while those making extensive use of AI and automation had average costs of USD 3.84 million, a savings of USD 1.88 million." - IBM's Cost of a Data Breach Report 2024.

So there. I said it. I think the current generation of GRC is mostly fluff, and not because those doing the work want it that way. I think the passionate GRC professionals out there dearly wish they could put better policies in place. Policies that are currently too expensive to implement at scale in many cases. Policies that would really make a difference. But their hands are tied. The same goes for SOC analysts who would kill to have those logs to work with, but once again, it costs too much. Cheaper, more reliable analysis (in both the GRC and SOC spaces) powered by AI would turn your top 50% of analysts into supervisors, guiding legions of virtual analysts. That in turn would free up budget headroom and analysis bandwidth to afford some of those security features and processes that would previously have been out of reach. Features and processes that will reshape the security landscape.

We're working on it. Stay tuned. Not for more marketing fluff. For technical demos and industry-shifting technological progress. Not GPT-in-a-box venture capital cash grabs. The technology has major maturity gaps to cross, and any AI GRC / SOC company out there claiming they're the end-all-be-all today... I'd steer clear. No one has satisfactorily leveraged LLMs for security comprehensively yet. I believe that at Simbian we will build, if not the first, then the most robust technology stack in this space. But more importantly, I believe the generative AI revolution will, in a few short years, have drastically improved the capabilities of teams around the world - especially the ability of GRC pros to do their jobs more effectively.

This was a long post! It's a problem set I'm deeply passionate about. If you're still reading, DM me "GRC with AI" and I'll show you the offering we've already proven effective in real customer operations. It's not finished, but it is progress.

So, to recap. AI in Governance, Risk, and Compliance: what does every CISO need to know? AI-for-Security isn't ready for comprehensive AI SOC-in-a-box analysis or compliance validation automation just yet. Anyone telling you otherwise is exaggerating. But it will be, and if you follow my posts, I'll deliver the technical details to prove it as we arrive there, one technical hurdle at a time.